Architecture

- 1: Design Overview

- 2: Trust Model

1 - Design Overview

Confidential computing projects are largely defined by what is inside the enclave and what is not. For Confidential Containers, the enclave contains the workload pod and helper processes and daemons that facilitate the workload pod. Everything else, including the hypervisor, other pods, and the control plane, is outside of the enclave and untrusted. This division is carefully considered to balance TCB size and sharing.

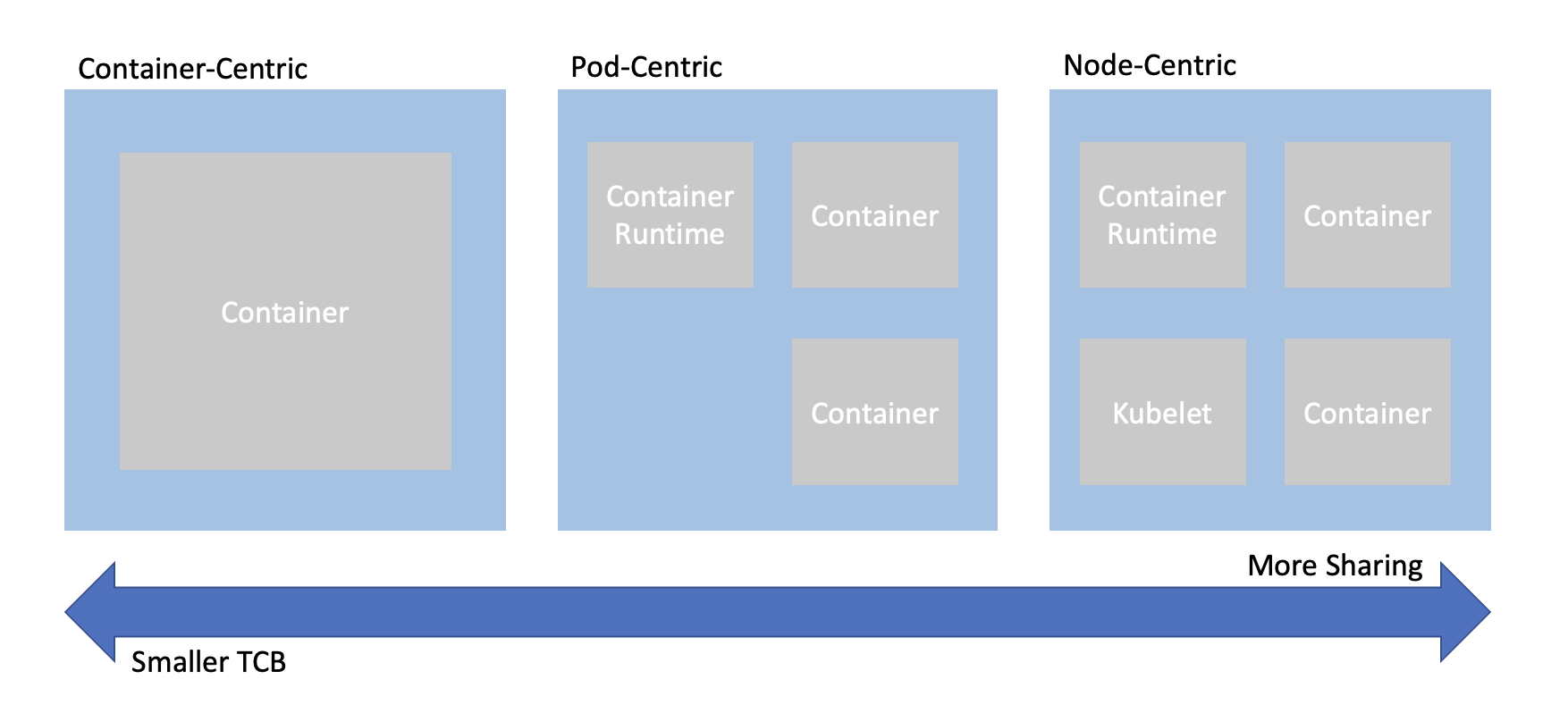

When trying to combine confidential computing and cloud native computing, often the first thing that comes to mind is either to put just one container inside of an enclave, or to put an entire worker node inside of an enclave. These approaches are known as container-centric virtualization and node-centric virtualization, respectively. Confidential Containers opts for a compromise between these approaches which avoids some of their pitfalls. Specifically, node-centric approaches tend to have a large TCB that includes components such as the Kubelet. This makes the attack surface of the confidential guest significantly larger. It is also difficult to implement managed clusters in node-centric approaches because the workload runs in the same context as the rest of the cluster. On the other hand, container-centric approaches can support very little sharing of resources. Sharing is a loose term, but one example is two containers that need to share information over the network. In a container-centric approach this traffic would leave the enclave. Protecting the traffic would add overhead and complexity.

Confidential Containers takes a pod-centric approach which balances TCB size and sharing. While Confidential Containers does have some daemons and processes inside the enclave, the API of the guest is relatively small. Furthermore the guest image is static and generic across workloads and even platforms, making it simpler to ensure security guarantees. At the same time, sharing between containers in the same pod is easy. For example, the pod network namespace doesn’t leave the enclave, so containers can communicate confidentially on it without additional overhead. These are just a few of the reasons why pod-centric virtualization seems to be the best way to provide confidential cloud native computing.

Kata Containers

Confidential Containers and Kata Containers are closely linked, but the relationship might not be obvious at first. Kata Containers is an existing open source project that encapsulates pods inside of VMs. Given the pod-centric design of Confidential Containers this is a perfect match. But if Kata runs pods inside of VMs, why do we need the Confidential Containers project at all? There are crucial changes needed on top of Kata Containers to preserve confidentiality.

Image Pulling

When using Kata Containers, container images are pulled on the worker node with the help of a CRI runtime like containerd. The images are exposed to the guest via filesystem passthrough. This is not suitable for confidential workloads because the container images are exposed to the untrusted host. With Confidential Containers, images are pulled and unpacked inside of the guest. This requires additional components such as image-rs to be part of the guest rootfs. These components are beyond the scope of traditional Kata deployments and live in the Confidential Containers guest components repository.

On the host, we use a snapshotter to pre-empt image pull and divert control flow to image-rs inside the guest.

sequenceDiagram

kubelet->>containerd: create container

containerd->>nydus snapshotter: load container snapshot

nydus snapshotter->>image-rs: download container

kubelet->>containerd: start container

containerd->>kata shim: start container

kata shim->>kata agent: start container

The above is a simplified diagram showing the interaction of containerd, the nydus snapshotter, and image-rs. The diagram does not show the creation of the sandbox.
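The division of labour between host and guest can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the class names, the `registry.example/app:1.0` reference, and the direct method call from snapshotter to puller are stand-ins chosen for illustration, not the interfaces of containerd, the nydus snapshotter, or image-rs. The point it shows is only that the host side handles the image reference while the image content is fetched and unpacked on the guest side.

```python
from dataclasses import dataclass, field


@dataclass
class GuestImagePuller:
    """Hypothetical stand-in for image-rs running inside the guest."""
    registry: dict[str, bytes]
    unpacked: dict[str, bytes] = field(default_factory=dict)

    def pull(self, image_ref: str) -> None:
        # Image bytes are fetched and stored only on the guest side.
        self.unpacked[image_ref] = self.registry[image_ref]


@dataclass
class HostSnapshotter:
    """Hypothetical stand-in for the host snapshotter that diverts pulls."""
    guest: GuestImagePuller
    seen_on_host: list[str] = field(default_factory=list)

    def load_snapshot(self, image_ref: str) -> None:
        # The host records the image reference but never touches the layers.
        self.seen_on_host.append(image_ref)
        self.guest.pull(image_ref)


# Assumed example data: one image in a fake in-memory registry.
registry = {"registry.example/app:1.0": b"layer-bytes"}
guest = GuestImagePuller(registry)
snapshotter = HostSnapshotter(guest)
snapshotter.load_snapshot("registry.example/app:1.0")
```

After the call, `snapshotter.seen_on_host` holds only the image reference, and the layer bytes exist only in `guest.unpacked`.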

Attestation

Confidential Containers also provides components inside the guest and elsewhere to facilitate attestation. Attestation is a crucial part of confidential computing and a direct requirement of many guest operations. For example, to unpack an encrypted container image, the guest must retrieve a secret key. Inside the guest, the confidential-data-hub (CDH) and attestation-agent (AA) handle operations involving secrets and attestation. Again, these components are beyond the scope of traditional Kata deployments and are located in the guest components repository.

The CDH and AA use the KBS Protocol to communicate with an external, trusted entity. Confidential Containers provides Trustee as an attestation service and key management engine that validates the guest TCB and releases secret resources.

sequenceDiagram

workload->>CDH: request secret

CDH->>AA: get attestation token

AA->>KBS: attestation request

KBS->>AA: challenge

AA->>KBS: attestation

KBS->>AA: attestation token

AA->>CDH: attestation token

CDH->>KBS: secret request

KBS->>CDH: encrypted secret

CDH->>workload: secret

The above is a somewhat simplified diagram of the attestation process. The diagram does not show the details of how the workload interacts with the CDH.
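The message order in the diagram can be sketched as a toy, in-memory Python model. This is not the KBS Protocol or the API of any Confidential Containers component: the class and function names are hypothetical, an HMAC with a shared demo key stands in for hardware-signed evidence, and the secret is returned in the clear rather than encrypted. The sketch only shows the challenge, evidence, token, and secret-release steps and why a guest with an unexpected measurement gets nothing.

```python
import hashlib
import hmac
import secrets

# Assumption: a shared demo key stands in for the hardware root of trust.
HARDWARE_KEY = b"demo-hardware-key"
EXPECTED_MEASUREMENT = hashlib.sha384(b"guest-image").hexdigest()


def sign_evidence(nonce: str, measurement: str) -> str:
    """Bind the measurement to the verifier's nonce (toy 'hardware' signature)."""
    return hmac.new(HARDWARE_KEY, (nonce + measurement).encode(), hashlib.sha384).hexdigest()


class ToyKBS:
    """Hypothetical key broker: issues challenges, checks evidence, releases secrets."""

    def __init__(self, resources: dict[str, bytes]):
        self.resources = resources
        self.nonces: set[str] = set()
        self.tokens: set[str] = set()

    def challenge(self) -> str:
        nonce = secrets.token_hex(16)
        self.nonces.add(nonce)
        return nonce

    def attest(self, nonce: str, measurement: str, signature: str) -> str:
        if nonce not in self.nonces:
            raise PermissionError("unknown or reused nonce")
        self.nonces.discard(nonce)  # single use, so old evidence cannot be replayed
        if not hmac.compare_digest(signature, sign_evidence(nonce, measurement)):
            raise PermissionError("evidence signature invalid")
        if measurement != EXPECTED_MEASUREMENT:
            raise PermissionError("unexpected guest measurement")
        token = secrets.token_hex(16)
        self.tokens.add(token)
        return token

    def get_resource(self, token: str, name: str) -> bytes:
        if token not in self.tokens:
            raise PermissionError("no valid attestation token")
        return self.resources[name]


class ToyAttestationAgent:
    """Hypothetical AA: answers the challenge with signed evidence."""

    def __init__(self, kbs: ToyKBS, measurement: str):
        self.kbs = kbs
        self.measurement = measurement

    def get_token(self) -> str:
        nonce = self.kbs.challenge()
        return self.kbs.attest(nonce, self.measurement, sign_evidence(nonce, self.measurement))


def request_secret(kbs: ToyKBS, agent: ToyAttestationAgent, name: str) -> bytes:
    """Plays the role of the CDH: obtain a token, then fetch the secret."""
    return kbs.get_resource(agent.get_token(), name)
```

In this model, an agent constructed with `EXPECTED_MEASUREMENT` can retrieve a resource through `request_secret`, while an agent reporting any other measurement is refused with `PermissionError` at the `attest` step.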

Putting the pieces together

If we take Kata Containers and add guest image pulling and attestation, we arrive at the following diagram, which represents Confidential Containers.

flowchart TD

kubelet-->containerd

containerd-->kata-shim

kata-shim-->hypervisor

containerd-->nydus-snapshotter

subgraph guest

kata-agent-->pod

ASR-->CDH

CDH<-->AA

pod-->ASR

image-rs-->pod

image-rs-->CDH

end

subgraph pod

container-a

container-b

container-c

end

subgraph Trustee

KBS-->AS

AS-->RVPS

end

AA-->KBS

CDH-->KBS

nydus-snapshotter-->image-rs

hypervisor-->guest

kata-shim<-->kata-agent

image-rs<-->registry

Clouds and Nesting

Most confidential computing hardware does not support nesting. More specifically, a confidential guest cannot be started inside of a confidential guest, and with few exceptions a confidential guest cannot be started inside of a non-confidential guest. This poses a challenge for those who do not have access to bare metal machines or would like to have virtual worker nodes.

To alleviate this, Confidential Containers supports a deployment mode known as Peer Pods, where a component called the Cloud API Adaptor (CAA) takes the place of a conventional hypervisor. Rather than starting a confidential PodVM locally, the CAA reaches out to a cloud API. Since the PodVM is no longer started locally, the worker node can be virtualized. This also allows Confidential Containers to integrate with cloud confidential VM offerings.

Peer Pods deployments share most of the same properties that are described in this guide.

Process-based Isolation

Confidential Containers also supports SGX with enclave-cc. Because the Kata guest cannot be run as a single process, the design of enclave-cc is significantly different. In fact, enclave-cc doesn’t use Kata at all, but it does still represent a pod-centric approach with some sharing between containers even as they run in separate enclaves. enclave-cc does use some of the guest components as crates.

Components

Confidential Containers integrates many components. Here is a brief overview of most of the components related to the project.

| Component | Repository | Purpose |
|---|---|---|
| Operator | operator | Installs Confidential Containers |
| Kata Shim | kata-containers/kata-containers | Starts PodVM and proxies requests to Kata Agent |
| Kata Agent | kata-containers/kata-containers | Sets up and runs the workload inside of a VM |
| image-rs | guest-components | Downloads and unpacks container images |
| ocicrypt-rs | guest-components | Decrypts encrypted container layers |
| confidential-data-hub | guest-components | Handles secret resources |
| attestation-agent | guest-components | Attests guest |
| api-server-rest | guest-components | Proxies requests from workload container to CDH |
| key-broker-service | Trustee | Coordinates attestation and secret delivery (relying party) |
| attestation-service | Trustee | Validates hardware evidence (verifier) |
| reference-value-provider-service | Trustee | Manages reference values |
| Nydus Snapshotter | containerd/nydus-snapshotter | Triggers guest image pulling |
| cloud-api-adaptor | cloud-api-adaptor | Starts PodVM in the cloud |
| agent-protocol-forwarder | cloud-api-adaptor | Forwards Kata Agent API from cloud API |

Component Dependencies

Many of the above components depend on each other either directly in the source, during packaging, or at runtime. The basic premise is that the operator deploys a special configuration of Kata Containers that uses a rootfs (built by the Kata CI) that includes the guest components. This diagram shows these relationships in more detail. The diagram does not capture runtime interactions.

flowchart LR

Trustee --> Versions.yaml

Guest-Components --> Versions.yaml

Kata --> kustomization.yaml

Guest-Components .-> Client-tool

Guest-Components --> enclave-agent

enclave-cc --> kustomization.yaml

Guest-Components --> versions.yaml

Trustee --> versions.yaml

Kata --> versions.yaml

subgraph Kata

Versions.yaml

end

subgraph Guest-Components

end

subgraph Trustee

Client-tool

end

subgraph enclave-cc

enclave-agent

end

subgraph Operator

kustomization.yaml

reqs-deploy

end

subgraph cloud-api-adaptor

versions.yaml

end

Workloads

Confidential Containers provides a set of primitives for building confidential Cloud Native applications. For instance, it allows a pod to be run inside of a confidential VM, and it handles encrypted and signed container images, sealed secrets, and other features described in the features section. This does not guarantee that any application run with Confidential Containers is confidential or secure. Users deploying applications with Confidential Containers should understand the attack surface and security implications of their workloads, focusing especially on APIs that cross the confidential trust boundary.

2 - Trust Model

2.1 - Trust Model for Confidential Containers

Confidential Containers mainly relies on VM enclaves, where the guest does not trust the host. Confidential computing, and by extension Confidential Containers, provides technical assurances that the untrusted host cannot access guest data or manipulate guest control flow.

Trusted

Confidential Containers maps pods to confidential VMs, meaning that everything inside a pod is within an enclave. In addition to the workload pod, the guest also contains helper processes and daemons to set up and control the pod. These include the kata-agent and the guest components described in the architecture section.

More specifically, the guest is defined as four components.

- Guest firmware

- Guest kernel

- Guest kernel command line

- Guest root filesystem

All platforms supported by Confidential Containers must measure these four components. Details about the mechanisms for each platform are below.
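As a rough illustration of what checking these four components means for a verifier, the following Python sketch hashes each component and compares the result against reference values. It is an assumption-laden simplification: the function names and the per-component SHA-384 digests are hypothetical, and real platforms form and report their measurements in platform-specific ways rather than as a simple dictionary of hashes.

```python
import hashlib

# The four components that define the guest, per the list above.
COMPONENTS = ("firmware", "kernel", "cmdline", "rootfs")


def measure(components: dict[str, bytes]) -> dict[str, str]:
    """Return a SHA-384 digest for each guest component (illustrative only)."""
    return {name: hashlib.sha384(components[name]).hexdigest() for name in COMPONENTS}


def mismatches(measured: dict[str, str], reference: dict[str, str]) -> list[str]:
    """Return the names of components whose digest differs from the reference."""
    return [name for name in COMPONENTS if measured.get(name) != reference.get(name)]


# Assumed example data standing in for real guest artifacts.
golden = {
    "firmware": b"firmware-blob",
    "kernel": b"kernel-blob",
    "cmdline": b"console=ttyS0",
    "rootfs": b"rootfs-blob",
}
reference_values = measure(golden)

# A host that alters the kernel command line changes the measurement.
tampered = dict(golden, cmdline=b"console=ttyS0 debug")
```

Here `mismatches(measure(golden), reference_values)` is empty, while the tampered guest is flagged on the `cmdline` component, so a verifier would refuse to release secrets to it.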

Note that the hardware measurement usually does not directly cover the workload containers. Instead, containers are covered by a second stage of measurement that uses generic OCI standards such as signing. This second stage of measurement is rooted in the trust of the first stage, but decoupled from the guest image.

Confidential Containers also relies on an external trusted entity, usually Trustee, to attest the guest.

Untrusted

Everything on the host outside of the enclave is untrusted. This includes the Kubelet, CRI runtimes like containerd, the host kernel, the Kata Shim, and more.

Since the Kubernetes control plane is untrusted, some traditional Kubernetes security techniques are not relevant to Confidential Containers without special considerations.

Crossing the trust boundary

In confidential computing careful scrutiny is required whenever information crosses the boundary between the trusted and untrusted contexts. Secrets should not leave the enclave without protection and entities outside of the enclave should not be able to trigger malicious behavior inside the guest.

In Confidential Containers there are APIs that cross the trust boundary. The main example is the API between the Kata Agent in the guest and the Kata Shim on the host. This API is protected with an OPA policy running inside the guest that can block malicious requests by the host.

Note that the kernel command line, which is used to configure the Kata Agent, does not cross the trust boundary because it is measured at boot. Assuming that the guest measurement is validated, the APIs that are most significant are those that are not measured by the hardware.

Quantifying the attack surface of an API is non-trivial. The Kata Agent can perform complex operations such as mounting a block device provided by the host. When a host-provided device is attached to the guest, the attack surface is extended to any information provided by this device. It’s also possible that any of the code used to implement the API inside the guest has a bug in it. As the complexity of the API increases, the likelihood of a bug increases. The nuances of the Kata Agent API are why Confidential Containers relies on a dynamic and user-configurable policy to either block endpoints entirely or only allow particular types of requests to be made. For example, the policy can be used to make sure that a block device is mounted only to a particular location.
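The two kinds of rule mentioned above, blocking an endpoint entirely and constraining the arguments of an allowed endpoint, can be sketched in Python as follows. This is a stand-in for illustration only: the actual policy is evaluated by OPA inside the guest and is not written in Python, and the endpoint names (`StartContainer`, `ExecProcess`, `MountBlockDevice`) and the `mount_point` field are hypothetical rather than the real Kata Agent API.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    """Hypothetical guest-side policy for requests arriving from the host."""
    allowed_endpoints: set[str] = field(default_factory=set)
    allowed_mount_points: set[str] = field(default_factory=set)

    def allows(self, endpoint: str, request: dict) -> bool:
        # Rule 1: endpoints not explicitly allowed are blocked entirely.
        if endpoint not in self.allowed_endpoints:
            return False
        # Rule 2: an allowed endpoint can still be restricted by its arguments.
        if endpoint == "MountBlockDevice":
            return request.get("mount_point") in self.allowed_mount_points
        return True


# Assumed example policy: containers may start, exec is blocked,
# and block devices may only be mounted at /data.
policy = AgentPolicy(
    allowed_endpoints={"StartContainer", "MountBlockDevice"},
    allowed_mount_points={"/data"},
)
```

With this policy, a host request to mount a device at `/data` is accepted, a request to mount it at `/etc` is rejected, and any `ExecProcess` request is rejected regardless of its arguments. Denying by default keeps newly added endpoints closed until the policy author opts in.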

Applications deployed with Confidential Containers should also be aware of the trust boundary. An application running inside of an enclave is not secure if it exposes a dangerous API to the outside world. Confidential applications should almost always be deployed with signed and/or encrypted images. Otherwise the container image itself can be considered as part of the unmeasured API.

Out of Scope

Some attack vectors are out of scope of confidential computing and Confidential Containers. For instance, confidential computing platforms usually do not protect against hardware side-channels. Neither does Confidential Containers. Different hardware platforms and platform generations may have different guarantees regarding properties like memory integrity. Confidential Containers inherits the properties of whatever TEE it is using.

Confidential computing does not protect against denial of service. Since the untrusted host is in charge of scheduling, it can simply not run the guest. This is true for Confidential Containers as well. In Confidential Containers the untrusted host can avoid scheduling the pod VM and the untrusted control plane can avoid scheduling the pod. These are seen as equivalent.

In general orchestration is untrusted in Confidential Containers. Confidential Containers provides few guarantees about where, when, or in what order workloads run, besides that the workload is deployed inside of a genuine enclave containing the expected software stack.

Cloud Native Personas

So far the trust model has been described in terms of a host and a guest, following from the underlying confidential computing trust model, but these terms are not used in cloud native computing. How do we understand the trust model in terms of cloud native personas? Confidential Containers is a flexible project. It does not explicitly define how parties should interact, but some possible arrangements are described in the personas section.

Measurement Details

As mentioned above, all hardware platforms must measure the four components representing the guest image. This table describes how each platform does this.

| Platform | Firmware | Kernel | Command Line | Rootfs |
|---|---|---|---|---|
| SEV-SNP | Pre-measured by ASP | Measured direct boot via OVMF | Measured direct boot | Measured direct boot |
| TDX | Pre-launch measurement | RTMR | RTMR | Dm-verity hash provided in command line |
| SE | Included in encrypted SE image | Included in SE image | Included in SE image | Included in SE image |

See Also

- Confidential Computing Consortium (CCC) published “A Technical Analysis of Confidential Computing”, section 5 of which defines the threat model for confidential computing.

- CNCF Security Technical Advisory Group published “Cloud Native Security Whitepaper”

- Kubernetes provides documentation: “Overview of Cloud Native Security”

- Open Web Application Security Project - “Docker Security Threat Modeling”

2.2 - Personas

Otherwise referred to as actors or agents, these are individuals or groups capable of carrying out a particular threat. In identifying personas we consider:

- The Runtime Environment, Figure 5, Page 19 of CNCF Cloud Native Security Paper. This highlights three layers, Cloud/Environment, Workload Orchestration, Application.

- The Kubernetes Overview of Cloud Native Security identifies the 4C’s of Cloud Native Security as Cloud, Cluster, Container and Code. However, data rather than code is core to Confidential Containers.

- The Confidential Computing Consortium paper A Technical Analysis of Confidential Computing defines Confidential Computing as the protection of data in use by performing computations in a hardware-based Trusted Execution Environment (TEE).

In considering personas we recognise that a trust boundary exists between each persona, and we explore how the least privilege principle (as described on Page 40 of Cloud Native Security Paper) should apply to any actions which cross these boundaries.

Confidential Containers can provide enhancements to ensure that the expected code/containers are the only code that can operate over the data. However, any vulnerabilities within this code are not mitigated by using Confidential Containers. The Cloud Native Security Whitepaper details lifecycle aspects that relate to the security of the code being placed into containers, such as static/dynamic analysis, security tests, and code review, which must still be followed.

Any of these personas could attempt to perform malicious actions:

Infrastructure Operator

This persona has privileges within the Cloud Infrastructure which includes the hardware and firmware used to provide compute, network and storage to the Cloud Native solution. They are responsible for availability of infrastructure used by the cloud native environment.

- Have access to the physical hardware.

- Have access to the processes involved in the deployment of compute/storage/memory used by any orchestration components and by the workload.

- Have control over TEE hardware availability/type.

- Are responsible for applying firmware updates to infrastructure including the TEE Technology.

Examples: Cloud Service Provider (CSP), Site Reliability Engineer (SRE), etc.

Orchestration Operator

This persona has privileges within the Orchestration/Cluster. They are responsible for deploying a solution into a particular cloud native environment and managing the orchestration environment. For a managed cluster, this would also include the administration of the cluster control plane.

- Control availability of service.

- Control webhooks and deployment of workloads.

- Control availability of cluster resources (data/networking/storage) and cluster services (Logging/Monitoring/Load Balancing) for the workloads.

- Control the deployment of runtime artifacts required by the TEE during initialisation, before hosting the confidential workload.

Example: A Kubernetes administrator responsible for deploying pods to a cluster and maintaining the cluster.

Workload Provider

This persona designs and creates the orchestration objects comprising the solution (e.g. Kubernetes Pod spec, etc). These objects reference containers published by Container Image Providers. In some cases the Workload and Container Image Providers may be the same entity. The solution defined is intended to provide the Application or Workload which in turn provides value to the Data Owners (customers and clients). The Workload Provider and Data Owner could be part of the same company/organisation, but following the least privilege principle the Workload Provider should not be able to view or manipulate end user data without informed consent.

- Needs to prove aspects of compliance to the customer.

- Defines what the solution requires in order to run and maintain compliance (resources, utility containers/services, storage).

- Chooses the method of verifying the container images (from those supported by Container Image Provider) and obtains artifacts needed to allow verification to be completed within the TEE.

- Provides the boot images initially required by the TEE during initialisation or designates a trusted party to do so.

- Provides the attestation verification service, or designates a trusted party to provide the attestation verification service.

Examples: 3rd party software vendor, CSP

Container Image Provider

This persona is responsible for the part of the supply chain that builds container images and provides them for use by the solution. Since a workload can be composed of multiple containers, there may be multiple container image providers. Some will be closely connected to the workload provider (business logic containers), while others will be more independent of the workload provider (sidecar containers). The container image provider is expected to use a mechanism to allow provenance of container images to be established when a workload pulls in these images at deployment time. This can take the form of signing or encrypting the container images.

- Builds container images.

- Owner of business logic containers. These may contain proprietary algorithms, models or secrets.

- Signs or encrypts the images.

- Defines the methods available for verifying the container images to be used.

- Publishes the signature verification key (public key).

- Provides any decryption keys through a secure channel (generally to a key management system controlled by a Key Broker Service).

- Provides other required verification artifacts (secure channel may be considered).

- Protects the keys used to sign or encrypt the container images.

It is recognised that hybrid options exist surrounding workload provider and container provider. For example the workload provider may choose to protect their supply chain by signing/encrypting their own container images after following the build patterns already established by the container image provider.

Example: Istio

Data Owner

Owner of the data used and manipulated by the application.

- Concerned with visibility and integrity of their data.

- Concerned with compliance and protection of their data.

- Uses and shares data with solutions.

- Wishes to ensure no visibility or manipulation of data is possible by the Orchestration Operator or Infrastructure Operator personas.

Discussion

Data Owner vs. All Other Personas

The key trust relationship here is between the Data Owner and the other personas. The Data Owner trusts the code, in the form of container images chosen by the Workload Provider, to operate across their data. However, they do not trust the Orchestration Operator or Infrastructure Operator with their data and wish to ensure data confidentiality.

Workload Provider vs. Container Image Provider

The Workload Provider is free to choose Container Image Providers that will provide not only the images they need but also support the verification method they require. A key aspect of this relationship is the Workload Provider applying Supply Chain Security practices (as described on Page 42 of Cloud Native Security Paper) when considering Container Image Providers. The Container Image Provider must therefore support the Workload Provider's ability to provide assurance to the Data Owner regarding integrity of the code.

With Confidential Containers we match the TEE boundary to the most restrictive boundary which is between the Workload Provider and the Orchestration Operator.

Orchestration Operator vs. Infrastructure Operator

Outside the TEE we distinguish between the Orchestration Operator and the Infrastructure Operator due to the nature of how they can impact the TEE and the concerns of the Workload Provider and Data Owner. Direct threats exist from the Orchestration Operator, as some orchestration actions must be permitted to cross the TEE boundary, otherwise orchestration cannot occur. A key goal is to deprivilege orchestration and restrict the Orchestration Operator's privileges across the boundary. However, indirect threats exist from the Infrastructure Operator, who would not be permitted to exercise orchestration APIs but could exploit the low-level hardware or firmware capabilities to access or impact the contents of a TEE.

Workload Provider vs. Data Owner

Inside the TEE we need to be able to distinguish between the Workload Provider and Data Owner in recognition that the same workload (or parts such as logging/monitoring etc) can be re-used with different data sets to provide a service/solution. In the case of a bespoke workload, the Workload Provider and Data Owner may be the same persona. As mentioned, the Data Owner must have a level of trust in the Workload Provider to use and expose the data provided in an expected and approved manner. Page 10 of A Technical Analysis of Confidential Computing suggests some approaches to establish trust between them.

The TEE boundary allows the introduction of secrets, but just as we recognised that the TEE does not provide protection from code vulnerabilities, we also recognise that a TEE cannot enforce complete distrust between Workload Provider and Data Owner. This means secrets within the TEE are at risk from both Workload Provider and Data Owner. Keeping secrets which protect the workload (container encryption etc) separated from secrets which protect the data (data encryption) is not provided simply by using a TEE.

Recognising that Data Owner and Workload Provider are separate personas helps us to identify threats to both data and workload independently and to recognise that any solution must consider the potential independent nature of these personas. Two examples of trust between Data Owner and Workload Provider are:

- AI models that are proprietary and protected require the workload to be encrypted and not shared with the Data Owner. In this case, secrets private to the Workload Provider are needed to access the workload, while secrets required to access the data are provided by the Data Owner, who trusts the workload/model without having direct access to how the workload functions. The Data Owner completely trusts the workload and Workload Provider, whereas the Workload Provider does not trust the Data Owner with the full details of their workload.

- The Data Owner verifies and approves certain versions of a workload, and the workload provides the Data Owner with secrets in order to fulfil this. These secrets are available in the TEE for use by the Data Owner to verify the workload. Once this is achieved, the Data Owner provides secrets and data into the TEE for use by the workload, in full confidence of what the workload will do with their data. The Data Owner independently verifies versions of the workload and only trusts specific versions of the workload with the data, whereas the Workload Provider completely trusts the Data Owner.

Data Owner vs. End User

We do not draw a distinction between Data Owner and end user, though we do recognise that in some cases these may not be identical. For example, data may be provided to a workload to allow analysis and results to be made available to an end user. The original data is never provided directly to the end user, but the derived data is. In this case the Data Owner can be different from the end user and may wish to protect this data from the end user.